Currently, an active “war” is in progress, one which mainstream technology blogs have curiously neglected to cover. This ongoing conflict, which could be construed as a misinformation campaign, should be named the “Battle for the 4K AV Port.” This contentious issue will change which ports are located on the back of the TV in a few years. And if you’re thinking about waiting to purchase that new 4K TV, please continue waiting.

Just a short while ago, some may remember, we were using analog white, red, and yellow RCA cables to transmit audio and video. Several intermediary steps have carried video technology forward, including VGA, S-Video, YPbPr, and DVI, amongst many others. It is widely accepted that HDMI is the current standard for Audio & Video (AV) connections. But now, with mainstream adoption of 4K under way, a new generation of port is needed to carry the higher bandwidth that accompanies the increase in pixels. Several competing standards exist at the moment, and it would be naive to believe they can all win.
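To put a rough number on that bandwidth jump, here is a minimal back-of-the-envelope sketch. It assumes 24 bits per pixel and a 60 Hz refresh rate, and it counts only raw pixel data, ignoring blanking intervals and link-encoding overhead, so real cable requirements are higher. The function name `raw_video_gbps` is made up for illustration.

```python
def raw_video_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Uncompressed pixel data rate in Gbit/s.

    Assumption: counts visible pixels only; blanking intervals and
    link-encoding overhead are ignored, so this is a lower bound.
    """
    return width * height * refresh_hz * bits_per_pixel / 1e9


# 1080p and 4K (UHD) at 60 Hz, 24 bits per pixel (assumed values)
full_hd = raw_video_gbps(1920, 1080, 60)
uhd_4k = raw_video_gbps(3840, 2160, 60)

print(f"1080p60: {full_hd:.2f} Gbit/s")
print(f"4K60:    {uhd_4k:.2f} Gbit/s")
print(f"4K carries {uhd_4k / full_hd:.1f}x the pixel data of 1080p")
```

Under these assumptions 4K at 60 Hz needs roughly 12 Gbit/s for pixel data alone, four times what 1080p needs, which is why the port itself has become the bottleneck.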

CURRENT CONTENDERS

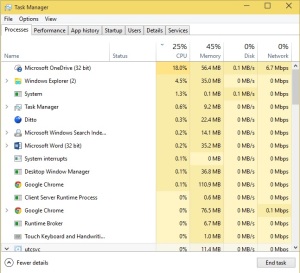

The currently dominant port, HDMI, was founded by several large players in the TV manufacturing and AV components industry, including Hitachi, Panasonic, Philips, Sony, Toshiba, Technicolor, and Silicon Image. This group, the HDMI Consortium, licenses the use of HDMI for several fees. There are annual membership dues, along with port fees. In other words, when a manufacturer puts an HDMI port on a device, the manufacturer must pay the Consortium a royalty of between 0.04 and 0.15 USD. Considering the staggering number of devices with multiple HDMI ports, this is clearly a very lucrative business. One of the big problems for HDMI is that its current revision, HDMI 2.0, is still far behind the competition in terms of bandwidth. Further damning is the slow uptake by computer and graphics card manufacturers.

The USB Implementers Forum, the group responsible for licensing USB technology, adheres to a similar pricing scheme. The founding members of the USB Implementers Forum are Compaq, DEC, IBM, Intel, Microsoft, NEC, and Nortel. USB was originally planned for communication between computing devices. However, in the most current revision, USB 3.1 Type C, the bandwidth and power a USB cable can carry make it a viable contender to HDMI. This group of computing giants can hold its own in potentially supplanting some of the biggest AV component suppliers in the HDMI Consortium.

Lastly, we have DisplayPort. This technology sprouted from VESA, which was founded by NEC, ATI, Western Digital, and others. DisplayPort carries a charge of 0.20 USD per port. When it was launched in 2008, DisplayPort was well ahead of the competition. It could carry much greater bandwidth than HDMI at a fraction of the size. Both cables and connectors are small. But alas, USB closed these gaps in its most recent revision, and DisplayPort signals can now be carried over USB 3.1 Type C connections. It is hard to imagine VESA itself finding a seat at the table.

ROOM FOR ANOTHER?

Although highly unlikely, it is possible for another group to unseat the incumbents. Much has changed in the electronics manufacturing landscape since these cabling technologies were formed decades ago. Power has shifted toward companies that were not as prominent in the 90s and 00s. Currently, the two biggest producers of TV screens are LG and Samsung, both South Korean multinational conglomerates. They are absent from these ruling bodies. Apple can easily be considered the premium device manufacturer at the moment, yet its go-it-alone 30-pin and Lightning ports were not adopted by the industry. Google has the largest presence in mobile. Qualcomm is the marquee ARM chip maker at the moment. AMD is certainly a player, providing the APUs for both Microsoft’s and Sony’s gaming consoles. Nvidia is a powerhouse in the GPU industry. A bevy of other companies would surely sign up if invited.

The fight for the new standard port is dynamic. Unfortunately, it is deprived of the coverage it surely deserves.